How to Tar/Untar container layers in Go

Tar is an archival, or “container”, format, meaning it does not provide compression. Additional tools like Bzip2 or Gzip are used to compress it.

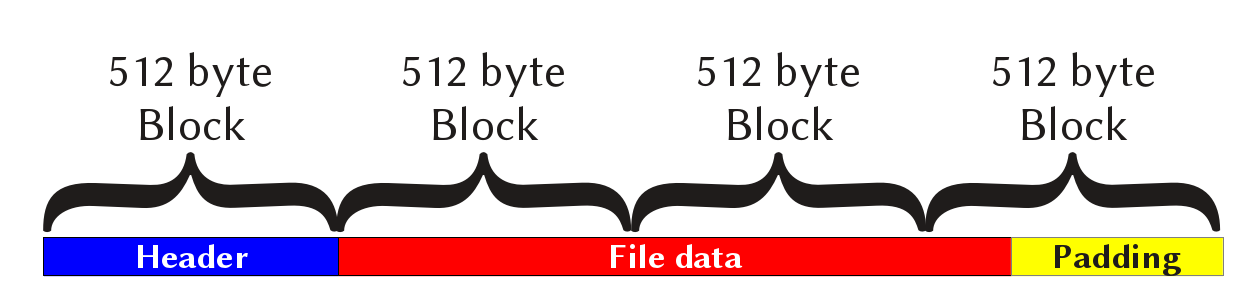

Logically speaking, a tar file is a linear sequence of entries. An entry consists of a header and a file body. Physically, a tar file consists of a sequence of zero-padded, fixed-size blocks of 512 bytes.
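To make the block layout tangible, here is a minimal sketch that writes one small entry into an in-memory archive and checks the resulting size. The file name and the helper archiveSize are made up for this illustration.

```go
package main

import (
	"archive/tar"
	"bytes"
	"fmt"
)

// archiveSize writes a single regular file entry with the given body into an
// in-memory tar archive and returns the total archive size in bytes.
func archiveSize(body []byte) (int, error) {
	var buf bytes.Buffer
	tw := tar.NewWriter(&buf)
	hdr := &tar.Header{
		Name:     "hello.txt", // hypothetical file name
		Mode:     0o644,
		Size:     int64(len(body)),
		Typeflag: tar.TypeReg,
	}
	if err := tw.WriteHeader(hdr); err != nil {
		return 0, err
	}
	if _, err := tw.Write(body); err != nil {
		return 0, err
	}
	// Close pads the last entry and appends the end-of-archive marker.
	if err := tw.Close(); err != nil {
		return 0, err
	}
	return buf.Len(), nil
}

func main() {
	size, err := archiveSize([]byte("hello"))
	if err != nil {
		panic(err)
	}
	// Even a 5-byte body yields an archive made of whole 512-byte blocks.
	fmt.Println(size%512 == 0) // true
}
```

The body is only five bytes long, yet the archive size is always a multiple of 512 because the header, the padded body and the end-of-archive marker are all written as whole blocks.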

The code is available on GitHub.

How to Tar a directory

Instead of writing the archive directly to a file, let’s use the io.Pipe function to create a synchronous in-memory pipe. This will give us more flexibility later when we need to calculate a digest.

import "io"

pipeReader, pipeWriter := io.Pipe()

As mentioned earlier, tar is an archival format, so we need to compress it separately. Go provides the compress/gzip package to handle compression.

import "compress/gzip"

compressed := gzip.NewWriter(pipeWriter)

Finally we create a tar writer.

import "archive/tar"

tr := tar.NewWriter(compressed)

Now it’s a matter of using filepath.WalkDir to list all the files in a directory and add them to our archive. However, there is one caveat: we must add files to the archive in a goroutine. Each write to the PipeWriter blocks until one or more reads from the PipeReader have consumed the data, so if nothing reads from the pipe, the write blocks forever.
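The blocking behaviour is easy to see in isolation. The following sketch (the helper names produce and readAll are invented for this example) writes into the pipe from a goroutine while the caller reads from the other end:

```go
package main

import (
	"fmt"
	"io"
)

// produce starts a goroutine that writes msg into a pipe and returns the
// read end. The write happens in a goroutine because, with no concurrent
// reader, pw.Write would never return.
func produce(msg string) io.Reader {
	pr, pw := io.Pipe()
	go func() {
		_, err := pw.Write([]byte(msg))
		// With a nil error this acts like Close: the reader sees io.EOF.
		pw.CloseWithError(err)
	}()
	return pr
}

// readAll drains the pipe and returns everything that was written to it.
func readAll(msg string) (string, error) {
	data, err := io.ReadAll(produce(msg))
	return string(data), err
}

func main() {
	out, err := readAll("layer data")
	if err != nil {
		panic(err)
	}
	fmt.Println(out) // layer data
}
```

Closing the write end matters just as much as the goroutine: without it, io.ReadAll would wait for more data indefinitely.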

filepath.WalkDir accepts a source directory to walk and a function to call for each file or directory in the filesystem tree.
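As a small illustration of the walk itself, the sketch below builds a temporary tree and collects the paths relative to its root. The helpers relPaths and demo, and the file names, are assumptions made for this example.

```go
package main

import (
	"fmt"
	"io/fs"
	"os"
	"path/filepath"
)

// relPaths walks root and returns every path below it, relative to root.
func relPaths(root string) ([]string, error) {
	var paths []string
	err := filepath.WalkDir(root, func(path string, d fs.DirEntry, err error) error {
		if err != nil {
			return err // the entry could not be read
		}
		rel, err := filepath.Rel(root, path)
		if err != nil {
			return err
		}
		if rel != "." { // skip the root itself
			paths = append(paths, filepath.ToSlash(rel))
		}
		return nil
	})
	return paths, err
}

// demo builds a small temporary tree (hypothetical file names) and walks it.
func demo() ([]string, error) {
	root, err := os.MkdirTemp("", "walk-example")
	if err != nil {
		return nil, err
	}
	defer os.RemoveAll(root)
	if err := os.MkdirAll(filepath.Join(root, "conf"), 0o755); err != nil {
		return nil, err
	}
	if err := os.WriteFile(filepath.Join(root, "conf", "nginx.conf"), nil, 0o644); err != nil {
		return nil, err
	}
	if err := os.WriteFile(filepath.Join(root, "index.html"), nil, 0o644); err != nil {
		return nil, err
	}
	return relPaths(root)
}

func main() {
	fmt.Println(demo()) // [conf conf/nginx.conf index.html] <nil>
}
```

WalkDir visits entries in lexical order, so a directory always appears before the files inside it. That is convenient for tar, where a directory entry should precede its contents.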

In order to populate a tar archive we need to do two things:

- Call tr.WriteHeader to write file metadata.

- Write the file itself to tr.

Header is a structure that contains metadata about the file:

- file name,

- permission and mode bits,

- size,

- owner’s user id and group id,

- checksum, file type, etc.

The archive/tar package also provides a helper function, tar.FileInfoHeader, to create a header struct from an fs.FileInfo object.
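Here is a short sketch of what tar.FileInfoHeader fills in for a regular file. The helper headerFor and the file name are made up for the example.

```go
package main

import (
	"archive/tar"
	"fmt"
	"os"
	"path/filepath"
)

// headerFor creates a temporary file with the given content and returns the
// tar header that tar.FileInfoHeader derives from its fs.FileInfo.
func headerFor(content []byte) (*tar.Header, error) {
	dir, err := os.MkdirTemp("", "tar-header-example")
	if err != nil {
		return nil, err
	}
	defer os.RemoveAll(dir)

	path := filepath.Join(dir, "index.html") // hypothetical file name
	if err := os.WriteFile(path, content, 0o644); err != nil {
		return nil, err
	}
	fi, err := os.Lstat(path)
	if err != nil {
		return nil, err
	}
	// The second argument is the symlink target; it stays empty for
	// anything that is not a symlink.
	return tar.FileInfoHeader(fi, "")
}

func main() {
	hdr, err := headerFor([]byte("<h1>hi</h1>"))
	if err != nil {
		panic(err)
	}
	fmt.Println(hdr.Name, hdr.Size, hdr.Typeflag == tar.TypeReg) // index.html 11 true
}
```

Note that the header name is only the base name of the file, because fs.FileInfo does not know the full path. This is why the header name has to be replaced with a path relative to the source directory before the header is written.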

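go func() {
	err := filepath.WalkDir(src, func(path string, d os.DirEntry, err error) error {
		if err != nil {
			return fmt.Errorf("walk: %w", err)
		}
		relPath, err := filepath.Rel(src, path)
		if err != nil {
			return fmt.Errorf("rel: %w", err)
		}
		// Skip the root directory itself.
		if relPath == "." {
			return nil
		}
		// Lstat does not follow symlinks, so links are archived as links.
		fi, err := os.Lstat(path)
		if err != nil {
			return fmt.Errorf("lstat: %w", err)
		}
		var link string
		if fi.Mode()&os.ModeSymlink != 0 {
			link, err = os.Readlink(path)
			if err != nil {
				return fmt.Errorf("readlink: %w", err)
			}
		}
		header, err := tar.FileInfoHeader(fi, link)
		if err != nil {
			return fmt.Errorf("header: %w", err)
		}
		// FileInfoHeader stores only the base name, so use the path
		// relative to src, with forward slashes as tar expects.
		header.Name = filepath.ToSlash(relPath)
		if err := tr.WriteHeader(header); err != nil {
			return fmt.Errorf("write header: %w", err)
		}
		// Only regular files carry a body.
		if header.Typeflag == tar.TypeReg {
			file, err := os.Open(path)
			if err != nil {
				return fmt.Errorf("open: %w", err)
			}
			_, err = io.Copy(tr, file)
			file.Close()
			if err != nil {
				return fmt.Errorf("copy: %w", err)
			}
		}
		return nil
	})
	// Flush the tar footer and the gzip trailer before closing the pipe.
	if err == nil {
		err = tr.Close()
	}
	if err == nil {
		err = compressed.Close()
	}
	// With a nil error the reader sees io.EOF; otherwise it sees err.
	pipeWriter.CloseWithError(err)
}()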

I won’t post the full source code here as it’s too big, but you can find it on GitHub; the link is at the top.
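For readers who want to see the pieces in one place, here is a condensed sketch of how such a function could look. It is not the code from the repository: the helpers addEntry and firstEntryName are invented for this example, errors.Join assumes Go 1.20 or later, and errors from the goroutine are reported through the returned reader.

```go
package main

import (
	"archive/tar"
	"compress/gzip"
	"errors"
	"fmt"
	"io"
	"io/fs"
	"os"
	"path/filepath"
)

// Tar streams src as a gzip-compressed tar archive through an in-memory pipe.
func Tar(src string) (io.ReadCloser, error) {
	pr, pw := io.Pipe()
	go func() {
		gz := gzip.NewWriter(pw)
		tw := tar.NewWriter(gz)
		err := filepath.WalkDir(src, func(path string, _ fs.DirEntry, err error) error {
			if err != nil {
				return err
			}
			return addEntry(tw, src, path)
		})
		// Write the tar footer, then the gzip trailer, collecting any errors.
		err = errors.Join(err, tw.Close(), gz.Close())
		pw.CloseWithError(err) // a nil error reaches the reader as io.EOF
	}()
	return pr, nil
}

// addEntry writes the header and, for regular files, the body of one entry.
func addEntry(tw *tar.Writer, src, path string) error {
	rel, err := filepath.Rel(src, path)
	if err != nil || rel == "." {
		return err // nil for the root directory, which is skipped
	}
	fi, err := os.Lstat(path)
	if err != nil {
		return err
	}
	var link string
	if fi.Mode()&os.ModeSymlink != 0 {
		if link, err = os.Readlink(path); err != nil {
			return err
		}
	}
	hdr, err := tar.FileInfoHeader(fi, link)
	if err != nil {
		return err
	}
	hdr.Name = filepath.ToSlash(rel)
	if err := tw.WriteHeader(hdr); err != nil {
		return err
	}
	if hdr.Typeflag != tar.TypeReg {
		return nil
	}
	f, err := os.Open(path)
	if err != nil {
		return err
	}
	defer f.Close()
	_, err = io.Copy(tw, f)
	return err
}

// firstEntryName archives a temporary directory holding one file and reads
// the name of the first entry back from the stream.
func firstEntryName() (string, error) {
	dir, err := os.MkdirTemp("", "tar-example")
	if err != nil {
		return "", err
	}
	defer os.RemoveAll(dir)
	if err := os.WriteFile(filepath.Join(dir, "a.txt"), []byte("hello"), 0o644); err != nil {
		return "", err
	}
	r, err := Tar(dir)
	if err != nil {
		return "", err
	}
	defer r.Close()
	gz, err := gzip.NewReader(r)
	if err != nil {
		return "", err
	}
	hdr, err := tar.NewReader(gz).Next()
	if err != nil {
		return "", err
	}
	return hdr.Name, nil
}

func main() {
	fmt.Println(firstEntryName()) // a.txt <nil>
}
```

Closing the tar writer, the gzip writer and the pipe in that order is the important part: each layer flushes its own trailer into the one below it, and only the final close lets the reader see the end of the stream.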

Now, in order to write the archive to a file, we can io.Copy from the pipe reader. Assuming our tar-ing function is called Tar and returns a pipe reader, we can use the following code:

import (
	"fmt"
	"io"
	"os"
)

var src = "/var/tmp/nginx"
var dst = "nginx.tar.gz"

file, err := os.Create(dst)
if err != nil {
	return fmt.Errorf("creating dst file: %w", err)
}
defer file.Close()

reader, err := Tar(src)
if err != nil {
	return fmt.Errorf("creating tar stream: %w", err)
}
defer reader.Close()

// Reading from the pipe drives the goroutine that writes the archive.
if _, err := io.Copy(file, reader); err != nil {
	return err
}

We can also use the go-digest package to compute the archive’s digest while we are saving it.

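import (
	"io"

	"github.com/opencontainers/go-digest"
)

compressedDigester := digest.Canonical.Digester()
...
// Every write goes to both the hash and the file.
mWriter := io.MultiWriter(compressedDigester.Hash(), file)
...
if _, err := io.Copy(mWriter, reader); err != nil {
	return err
}
// compressedDigester.Digest() now returns the digest of the compressed archive.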

Here, instead of writing the data directly to the file, we use an io.MultiWriter instance to write it to both the file and our hashing object.
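The same pattern can be tried without any external dependency. The sketch below swaps in crypto/sha256 from the standard library for go-digest (SHA-256 is go-digest's canonical algorithm) and a bytes.Buffer for the file. The helpers copyWithDigest and demo are invented for this example.

```go
package main

import (
	"bytes"
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"io"
	"strings"
)

// copyWithDigest copies src to dst and returns the SHA-256 digest of every
// byte that passed through, formatted as "sha256:<hex>".
func copyWithDigest(dst io.Writer, src io.Reader) (string, error) {
	h := sha256.New()
	// Each write to the MultiWriter is duplicated to the hash and to dst.
	if _, err := io.Copy(io.MultiWriter(h, dst), src); err != nil {
		return "", err
	}
	return "sha256:" + hex.EncodeToString(h.Sum(nil)), nil
}

// demo copies a short string into a buffer that stands in for the file.
func demo() (copied, sum string, err error) {
	var file bytes.Buffer
	sum, err = copyWithDigest(&file, strings.NewReader("hello"))
	return file.String(), sum, err
}

func main() {
	copied, sum, err := demo()
	if err != nil {
		panic(err)
	}
	fmt.Println(copied, sum)
}
```

Because the hash sees the bytes as they are written, the digest is ready as soon as the copy finishes and the archive never has to be read a second time.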

How to Untar an archive

In order to unpack an archive we need to know how it was compressed. We can find the compression method by inspecting the first bytes of the archive.

- Zip (.zip) starts with 0x50, 0x4b, 0x03, 0x04

- Gzip (.gz) starts with 0x1f, 0x8b, 0x08

- bzip2 (.bz2) starts with 0x42, 0x5a, 0x68

type Compression int

const (
	Uncompressed Compression = iota
	Bzip2
	Gzip
)

func DetectCompression(source []byte) Compression {
	// Compare the start of source against each known signature.
	for compression, m := range map[Compression][]byte{
		Bzip2: {0x42, 0x5A, 0x68},
		Gzip:  {0x1F, 0x8B, 0x08},
	} {
		if len(source) < len(m) {
			continue
		}
		if bytes.Equal(m, source[:len(m)]) {
			return compression
		}
	}
	return Uncompressed
}
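We can double-check the gzip signature by compressing a few bytes with compress/gzip and looking at the start of the output. The helper gzipPrefix is made up for this sketch.

```go
package main

import (
	"bytes"
	"compress/gzip"
	"fmt"
)

// gzipPrefix compresses data with compress/gzip and returns the first three
// bytes of the result.
func gzipPrefix(data []byte) ([]byte, error) {
	var buf bytes.Buffer
	zw := gzip.NewWriter(&buf)
	if _, err := zw.Write(data); err != nil {
		return nil, err
	}
	// Close flushes the compressed data and writes the gzip trailer.
	if err := zw.Close(); err != nil {
		return nil, err
	}
	return buf.Bytes()[:3], nil
}

func main() {
	prefix, err := gzipPrefix([]byte("hello"))
	if err != nil {
		panic(err)
	}
	fmt.Printf("% x\n", prefix) // 1f 8b 08
}
```

The first two bytes identify the gzip format and the third one is the compression method, where 0x08 stands for deflate.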

The DecompressStream function takes an io.Reader instance, detects the compression and creates the appropriate reader.

import (
	"bufio"
	"bytes"
	"compress/bzip2"
	"compress/gzip"
	"fmt"
	"io"
)

func DecompressStream(src io.Reader) (io.Reader, Compression, error) {
	buffer := bufio.NewReader(src)
	// Peek does not consume the bytes. For a stream shorter than 10 bytes
	// it returns io.EOF together with the bytes that are available.
	sig, err := buffer.Peek(10)
	if err != nil && err != io.EOF {
		return nil, Uncompressed, err
	}
	compression := DetectCompression(sig)
	switch compression {
	case Uncompressed:
		return buffer, Uncompressed, nil
	case Bzip2:
		return bzip2.NewReader(buffer), Bzip2, nil
	case Gzip:
		gzipReader, err := gzip.NewReader(buffer)
		if err != nil {
			return nil, Gzip, err
		}
		return gzipReader, Gzip, nil
	default:
		return nil, Uncompressed, fmt.Errorf("unsupported compression: %d", compression)
	}
}
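The reason for wrapping the source in a bufio.Reader is that Peek lets us look at the signature without consuming it. A small sketch (the helper peekThenRead is invented for this example) shows that the peeked bytes are still there for the next read:

```go
package main

import (
	"bufio"
	"fmt"
	"io"
	"strings"
)

// peekThenRead peeks at up to n bytes of input and then reads the whole
// stream, returning both results.
func peekThenRead(input string, n int) (peeked, rest string, err error) {
	br := bufio.NewReader(strings.NewReader(input))
	sig, err := br.Peek(n)
	// A short stream yields io.EOF along with the bytes that exist.
	if err != nil && err != io.EOF {
		return "", "", err
	}
	// Copy the slice now: it is only valid until the next read.
	peeked = string(sig)
	data, err := io.ReadAll(br)
	return peeked, string(data), err
}

func main() {
	fmt.Println(peekThenRead("BZh91AY", 3)) // BZh BZh91AY <nil>
	fmt.Println(peekThenRead("ab", 10))     // ab ab <nil>
}
```

The second call shows the short-stream case: Peek still hands back the two available bytes, so treating io.EOF as fatal at this point would reject inputs that are merely small.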

The untarring function is simple, so I’ll include its full code. We first start by decompressing the input stream, an io.Reader instance. We then instantiate a new tar reader by calling tar.NewReader. Afterwards, we call tr.Next in a loop to move to the next file in the archive.

import (
	"archive/tar"
	"fmt"
	"io"
	"os"
	"path/filepath"
)

func Untar(src io.Reader, dst string) error {
	decompressed, _, err := DecompressStream(src)
	if err != nil {
		return err
	}
	tr := tar.NewReader(decompressed)
	for {
		header, err := tr.Next()
		if err == io.EOF {
			break
		}
		if err != nil {
			return fmt.Errorf("tar read: %w", err)
		}
		header.Name = filepath.Clean(header.Name)
		// Reject names such as "../../etc/passwd" that would escape dst.
		// filepath.IsLocal requires Go 1.20 or later.
		if !filepath.IsLocal(header.Name) {
			return fmt.Errorf("illegal entry name: %q", header.Name)
		}
		path := filepath.Join(dst, header.Name)
		fi := header.FileInfo()
		mask := fi.Mode()
		switch header.Typeflag {
		case tar.TypeDir:
			// Create the directory unless it already exists.
			if fi, err := os.Lstat(path); !(err == nil && fi.IsDir()) {
				if err := os.MkdirAll(path, mask); err != nil {
					return fmt.Errorf("mkdir: %w", err)
				}
			}
		case tar.TypeReg:
			// O_TRUNC avoids leftover bytes when overwriting a longer file.
			file, err := os.OpenFile(path, os.O_CREATE|os.O_WRONLY|os.O_TRUNC, mask)
			if err != nil {
				return fmt.Errorf("open: %w", err)
			}
			if _, err := io.Copy(file, tr); err != nil {
				file.Close()
				return fmt.Errorf("copy: %w", err)
			}
			file.Close()
		case tar.TypeSymlink:
			// Resolve the target relative to the link's directory and make
			// sure it stays inside dst. The check is lexical only, and
			// absolute link targets are not rejected by it.
			target := filepath.Join(filepath.Dir(header.Name), header.Linkname)
			if !filepath.IsLocal(target) {
				return fmt.Errorf("symlink %q points outside of dst: %q", header.Name, header.Linkname)
			}
			if err := os.Symlink(header.Linkname, path); err != nil {
				return fmt.Errorf("symlink: %w", err)
			}
		default:
			return fmt.Errorf("unsupported type: %d", header.Typeflag)
		}
	}
	return nil
}
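When unpacking archives from untrusted sources, neither an entry name nor a symlink target should be able to point outside dst. The sketch below isolates such a check so that it can be tried on a few inputs. The helper safeEntry is made up for this example, filepath.IsLocal assumes Go 1.20 or later, and rejecting absolute link targets is a deliberate choice of this sketch. Container root filesystems often contain absolute symlinks, so a real tool may need to allow them.

```go
package main

import (
	"fmt"
	"path/filepath"
)

// safeEntry reports whether a tar entry called name may be extracted.
// linkname is the symlink target, or "" for other entry types.
// Both checks are lexical; they do not resolve symlinks that exist on disk.
func safeEntry(name, linkname string) bool {
	if !filepath.IsLocal(name) {
		return false
	}
	if linkname == "" {
		return true
	}
	if filepath.IsAbs(linkname) {
		return false // assumption of this sketch, see the text above
	}
	// Resolve the target relative to the directory that holds the link.
	return filepath.IsLocal(filepath.Join(filepath.Dir(name), linkname))
}

func main() {
	fmt.Println(safeEntry("etc/nginx.conf", ""))      // true
	fmt.Println(safeEntry("../../etc/passwd", ""))    // false
	fmt.Println(safeEntry("bin/sh", "busybox"))       // true
	fmt.Println(safeEntry("bin/sh", "../../outside")) // false
}
```

A lexical check like this is only a first line of defence: it does not notice an entry that is written through a symlink created earlier in the same archive.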

This post is part of a series.

- Part 1: Container build tool

- Part 2: How-to build OCI Image by hands

- Part 3: Building OCI images with Go. No run command yet

- Part 4: How to Tar/Untar container layers in Go

- Part 5: Linux kernel namespaces

- Part 6: Mini container runtime in Go

- Part 7: Union mount